Nonparametric Curve Estimation by Kernel Smoothers: Efficiency of Unbiased Risk Estimate and GCV Selectors


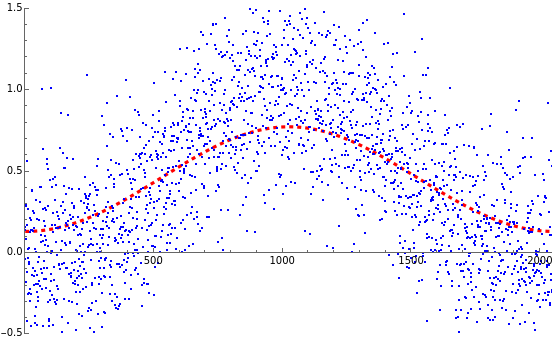

This Demonstration considers one of the simplest nonparametric-regression problems: let $f$ be a smooth real-valued function over the interval $[0,1]$; recover (or estimate) $f$ when one only knows $n$ approximate values $y_1, \ldots, y_n$ for $f(t_1), \ldots, f(t_n)$ that satisfy the model $y_i = f(t_i) + \sigma \varepsilon_i$, $i = 1, \ldots, n$, where the $t_i$ are equispaced points and the $\varepsilon_i$ are independent, standard normal random variables. Sometimes one assumes that the noise level $\sigma$ is known. Such a curve estimation problem is also called a signal denoising problem.
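A minimal Python sketch of this data model, assuming the design $t_i = i/n$ and a hypothetical test curve $f$ (neither is taken from the Demonstration itself):

```python
import numpy as np

def simulate(f, n, sigma, seed=0):
    """Draw y_i = f(t_i) + sigma * eps_i on the (assumed) grid t_i = i/n, i = 1..n."""
    rng = np.random.default_rng(seed)
    t = np.arange(1, n + 1) / n          # equispaced design points in (0, 1]
    eps = rng.standard_normal(n)         # independent N(0, 1) noise
    return t, f(t) + sigma * eps

# Hypothetical smooth curve, used only for illustration.
f = lambda t: np.sin(2 * np.pi * t) + 0.5 * t
t, y = simulate(f, n=1024, sigma=0.3)
print(t[:3], y.shape)
```

Whether $\sigma$ is treated as known matters later: some bandwidth selectors need it, others do not.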

Contributed by: Didier A. Girard (January 2013)

(CNRS-LJK and University Joseph Fourier, Grenoble)

Open content licensed under CC BY-NC-SA

Snapshots

Details

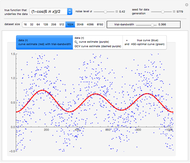

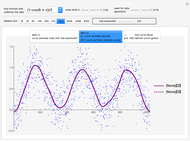

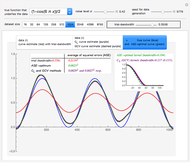

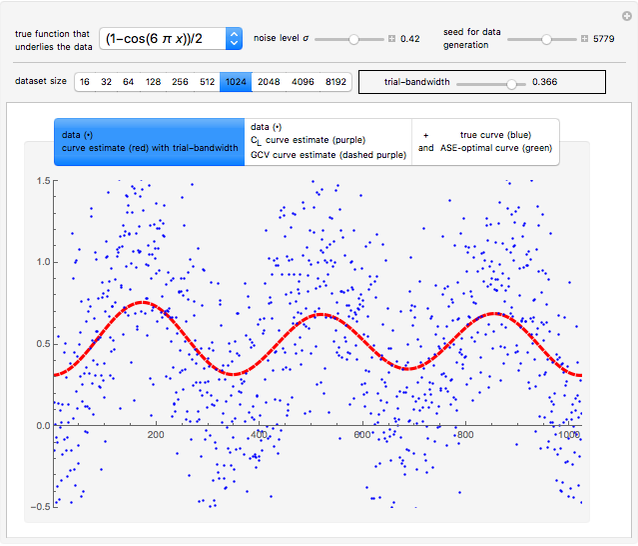

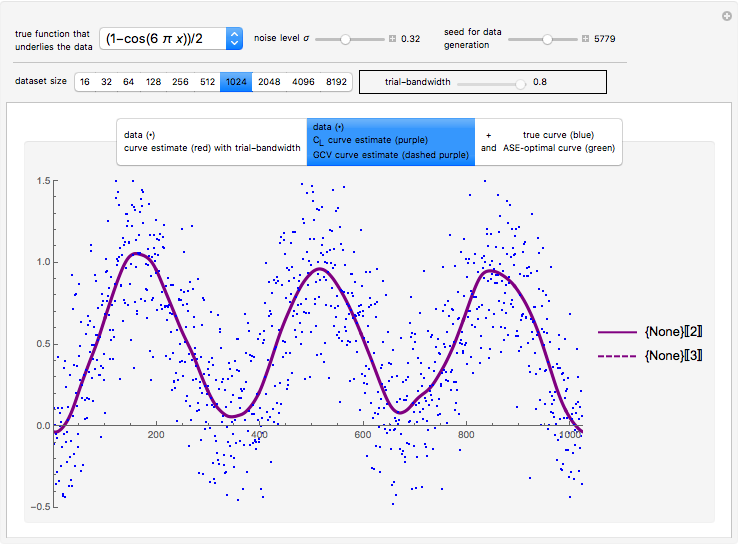

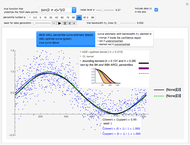

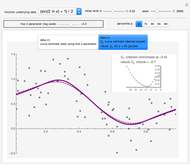

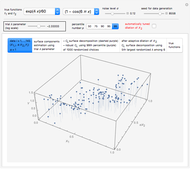

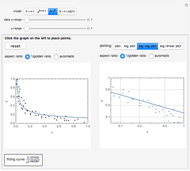

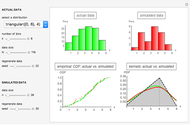

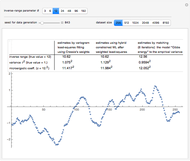

The three snapshots above are respectively the three views of the results obtained when analysing a fixed data set of size 1024.

The kernel estimation method for nonparametric regression is a particular case of the Loess method mentioned in Ian McLeod's Demonstration, How Loess Works.

Here we consider the case of a local constant fit with a spatially homogeneous amount of smoothing.
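With one global bandwidth $h$, the local constant fit is linear in the data, $\hat f_h = A_h y$, so choosing the amount of smoothing reduces to minimizing a one-dimensional criterion over $h$. The sketch below assumes $t_i = i/n$ and uses a Gaussian kernel purely for illustration. It builds $A_h$ as a dense matrix and evaluates the two selectors named in the title in the standard forms we assume here: the unbiased risk estimate $\frac1n\|(I-A_h)y\|^2 + \frac{2\sigma^2}{n}\operatorname{tr}A_h - \sigma^2$, which requires $\sigma$, and GCV, $\frac1n\|(I-A_h)y\|^2 / (1 - \operatorname{tr}A_h/n)^2$, which does not. The test curve, noise level, bandwidth grid, and the loss-ratio "efficiency" printed at the end are illustrative assumptions, not the Demonstration's settings.

```python
import numpy as np

def gaussian(u):
    # Unnormalized Gaussian profile; the constant cancels once the weights are normalized.
    return np.exp(-0.5 * u ** 2)

def smoother_matrix(n, h, kernel=gaussian):
    """Local constant (Nadaraya-Watson) smoother matrix on t_i = i/n, one global bandwidth h.

    Row i holds kernel((t_i - t_j) / h), normalized to sum to 1, so that
    (A @ y)[i] is a locally weighted average of the observations y_j.
    """
    t = np.arange(1, n + 1) / n
    W = kernel((t[:, None] - t[None, :]) / h)
    return W / W.sum(axis=1, keepdims=True)

def ure(y, A, sigma):
    """Unbiased estimate of mean((A @ y - f)**2); it requires the noise level sigma."""
    n = len(y)
    r = y - A @ y
    return r @ r / n + 2 * sigma ** 2 * np.trace(A) / n - sigma ** 2

def gcv(y, A):
    """Generalized cross-validation score; it does not require sigma."""
    n = len(y)
    r = y - A @ y
    return (r @ r / n) / (1 - np.trace(A) / n) ** 2

# Hypothetical experiment: curve, noise level and bandwidth grid are illustrative only.
rng = np.random.default_rng(1)
n, sigma = 256, 0.3
t = np.arange(1, n + 1) / n
f = np.sin(2 * np.pi * t)
y = f + sigma * rng.standard_normal(n)

hs = np.linspace(0.005, 0.2, 40)
mats = [smoother_matrix(n, h) for h in hs]
loss = np.array([np.mean((A @ y - f) ** 2) for A in mats])  # uses f: only for evaluation
i_ure = int(np.argmin([ure(y, A, sigma) for A in mats]))
i_gcv = int(np.argmin([gcv(y, A) for A in mats]))
i_opt = int(np.argmin(loss))
print("h (URE, GCV, optimal):", hs[i_ure], hs[i_gcv], hs[i_opt])
# Loss ratio in (0, 1]; 1 means the selector matched the best bandwidth on the grid.
print("efficiency (URE, GCV):", loss[i_opt] / loss[i_ure], loss[i_opt] / loss[i_gcv])
```

The dense $n \times n$ matrix is used only for transparency; at equispaced points the same fit can be computed as a convolution, and $\operatorname{tr}A_h$ is then just the sum of the normalized center weights.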

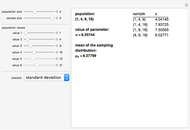

The kernels used here are from the class of biweight kernels, which resembles the family (as $h$ varies) of normal (Gaussian) densities with mean $0$ and standard deviation $h$, except that a biweight kernel has compact support, the length of its support being the kernel bandwidth, also denoted $h$. Each candidate curve estimate needs to be evaluated only at the equispaced points $t_i$, $i = 1, \ldots, n$, and its computation is simply and quickly provided by the built-in Mathematica function ListConvolve.
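At equispaced points the local constant fit is a discrete convolution. The sketch below is in Python, with `numpy.convolve` standing in for ListConvolve. It assumes the biweight profile $(1-u^2)^2$ on the support $[-h/2, h/2]$ (so the support length is $h$) and $t_i = i/n$, and it renormalizes the truncated kernel near the ends of the interval; that boundary treatment is our own choice and may differ from the Demonstration's.

```python
import numpy as np

def biweight_weights(n, h):
    """Discrete biweight weights at offsets j/n, assuming support [-h/2, h/2] (length h)."""
    m = int(np.floor(h * n / 2))            # grid steps inside the half-support
    x = np.arange(-m, m + 1) / n
    return (1 - (2 * x / h) ** 2) ** 2      # profile (1 - u^2)^2 with u = 2x/h in [-1, 1]

def kernel_smooth(y, h):
    """Local constant fit at every t_i via two convolutions (numerator and normalizer)."""
    n = len(y)
    w = biweight_weights(n, h)
    if len(w) > n:
        raise ValueError("bandwidth too large for this sample size")
    num = np.convolve(y, w, mode="same")
    den = np.convolve(np.ones(n), w, mode="same")   # kernel mass left after truncation at the ends
    return num / den

# Check against a direct (dense) local constant fit on t_i = i/n.
n, h = 64, 0.1
rng = np.random.default_rng(2)
y = rng.standard_normal(n)
t = np.arange(1, n + 1) / n
u = 2 * (t[:, None] - t[None, :]) / h
K = np.where(np.abs(u) <= 1, (1 - u ** 2) ** 2, 0.0)
direct = (K @ y) / K.sum(axis=1)
print(np.allclose(kernel_smooth(y, h), direct))   # True
```

Two length-$n$ convolutions replace an $n \times n$ matrix product, which is why each candidate bandwidth is cheap to evaluate.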

We restrict ourselves to sample sizes $n$ that are powers of $2$: all the required discretized kernel functions can then be obtained simply and quickly by first precomputing (in the Initialization step) the kernel values for $n$ equal to its largest possible value and for bandwidths on a fixed fine grid of size 401, and then by appropriately subsampling this precomputed Table.
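The precompute-and-subsample idea works because, for $n = n_{\max}/2^p$, the grid offsets $j/n$ are exactly every $2^p$-th offset of the finest grid, so the kernel values for $n$ form a strided slice of those for $n_{\max}$. A Python sketch, with hypothetical values for $n_{\max}$ and for the 401-point bandwidth grid, the same assumed biweight on $[-h/2, h/2]$, and storing only the nonnegative half of each symmetric kernel:

```python
import numpy as np

N_MAX = 2 ** 12                         # hypothetical largest allowed sample size
H_GRID = np.linspace(0.002, 0.5, 401)   # hypothetical fine grid of 401 bandwidths

def biweight_half(n, h):
    """Biweight values (1 - (2x/h)^2)^2 at offsets x = 0, 1/n, 2/n, ... with x <= h/2."""
    m = int(np.floor(h * n / 2))        # grid steps inside the half-support
    x = np.arange(m + 1) / n
    return (1 - (2 * x / h) ** 2) ** 2

# Initialization: one half-kernel per grid bandwidth, computed once at n = N_MAX.
TABLE = [biweight_half(N_MAX, h) for h in H_GRID]

def kernel_from_table(n, k):
    """Half-kernel for sample size n and bandwidth H_GRID[k], by subsampling TABLE."""
    if n <= 0 or N_MAX % n != 0:
        raise ValueError("n must be a power of 2 not exceeding N_MAX")
    return TABLE[k][:: N_MAX // n]

def full_kernel(half):
    """Symmetric kernel [w_m, ..., w_1, w_0, w_1, ..., w_m] from its nonnegative half."""
    return np.concatenate([half[:0:-1], half])

for n in (256, 1024, 4096):
    for k in (0, 200, 400):
        a, b = kernel_from_table(n, k), biweight_half(n, H_GRID[k])
        assert a.shape == b.shape and np.allclose(a, b)
print("subsampled kernels match direct evaluation")
```

The lengths agree as well: with step $s = n_{\max}/n$, the slice keeps $\lfloor \lfloor h n_{\max}/2 \rfloor / s \rfloor + 1$ values, and $\lfloor \lfloor x \rfloor / s \rfloor = \lfloor x/s \rfloor$ for any positive integer $s$.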

